Ansible Playbooks

In this section we will take a look at the main reason that I can see at least for Ansible, I mean it is great to take a single command and hit many different servers to perform simple commands such as rebooting a long list of servers and saving the hassle of having to connect to each one individually.

But what about actually taking a bare operating system and declaring the software and services we want running on that system and making sure they are all running in that desired state.

This is where ansible playbooks come in. A playbook enables us to take our group of servers and perform configuration and installation tasks against that group.

Playbook format

Playbook > Plays > Tasks

For anyone that comes from a sports background you may have come across the term playbook, a playbook then tells the team how you will play made up of various plays and tasks if we think of the plays as the set pieces within the sport or game, and the tasks are associated to each play, you can have multiple tasks to make up a play and in the playbook, you may have multiple different plays.

These playbooks are written in YAML (YAML ain’t markup language) you will find a lot of the sections we have covered so far especially Containers and Kubernetes to feature YAML formatted configuration files.

Let’s take a look at a simple playbook called playbook.yml.

- name: Simple Play

hosts: localhost

connection: local

tasks:

- name: Ping me

ping:

- name: print os

debug:

msg: "{{ ansible_os_family }}"

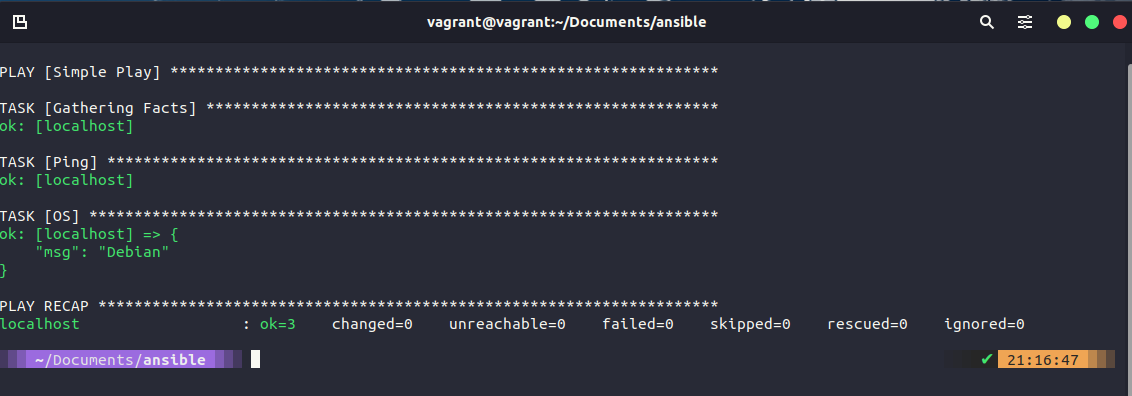

You will find the above file simple_play. If we then use the ansible-playbook simple_play.yml command we will walk through the following steps.

You can see the first task of "gathering steps" happened, but we didn't trigger or ask for this? This module is automatically called by playbooks to gather useful variables about remote hosts ansible.builtin.setup

Our second task was to set a ping, this is not an ICMP ping but a python script to report back pong on successful connectivity to remote or localhost ansible.builtin.ping

Then our third or our second defined task as the first one will run unless you disable was the printing of a message telling us our OS. In this task we are using conditionals, we could run this playbook against all different types of operating systems and this would return the OS name. We are simply messaging this output for ease but we could add a task to say something like:

tasks:

- name: "shut down Debian flavoured systems"

command: /sbin/shutdown -t now

when: ansible_os_family == "Debian"

Vagrant to set up our environment

We are going to use Vagrant to set up our node environment, I am going to keep this at a reasonable 4 nodes but you can hopefully see that this could easily be 300 or 3000 and this is the power of Ansible and other configuration management tools to be able to configure your servers.

You can find this file located here (Vagrantfile)

Vagrant.configure("2") do |config|

servers=[

{

:hostname => "db01",

:box => "bento/ubuntu-21.10",

:ip => "192.168.169.130",

:ssh_port => '2210'

},

{

:hostname => "web01",

:box => "bento/ubuntu-21.10",

:ip => "192.168.169.131",

:ssh_port => '2211'

},

{

:hostname => "web02",

:box => "bento/ubuntu-21.10",

:ip => "192.168.169.132",

:ssh_port => '2212'

},

{

:hostname => "loadbalancer",

:box => "bento/ubuntu-21.10",

:ip => "192.168.169.134",

:ssh_port => '2213'

}

]

config.vm.base_address = 600

servers.each do |machine|

config.vm.define machine[:hostname] do |node|

node.vm.box = machine[:box]

node.vm.hostname = machine[:hostname]

node.vm.network :public_network, bridge: "Intel(R) Ethernet Connection (7) I219-V", ip: machine[:ip]

node.vm.network "forwarded_port", guest: 22, host: machine[:ssh_port], id: "ssh"

node.vm.provider :virtualbox do |v|

v.customize ["modifyvm", :id, "--memory", 2048]

v.customize ["modifyvm", :id, "--name", machine[:hostname]]

end

end

end

end

Use the vagrant up command to spin these machines up in VirtualBox, You might be able to add more memory and you might also want to define a different private_network address for each machine but this works in my environment. Remember our control box is the Ubuntu desktop we deployed during the Linux section.

If you are resource contrained then you can also run vagrant up web01 web02 to only bring up the webservers that we are using here.

Ansible host configuration

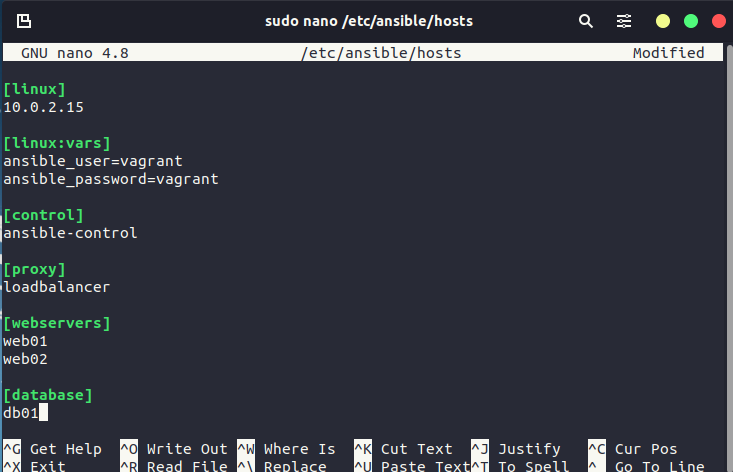

Now that we have our environment ready, we can check ansible and for this, we will use our Ubuntu desktop (You could use this but you can equally use any Linux-based machine on your network access to the network below) as our control, let’s also add the new nodes to our group in the ansible hosts file, you can think of this file as an inventory, an alternative to this could be another inventory file that is called on as part of your ansible command with -i filename this could be useful vs using the host file as you can have different files for different environments, maybe production, test and staging. Because we are using the default hosts file we do not need to specify as this would be the default used.

I have added the following to the default hosts file.

Before moving on we want to make sure we can run a command against our nodes, let’s run ansible nodes -m command -a hostname this simple command will test that we have connectivity and report back our host names.

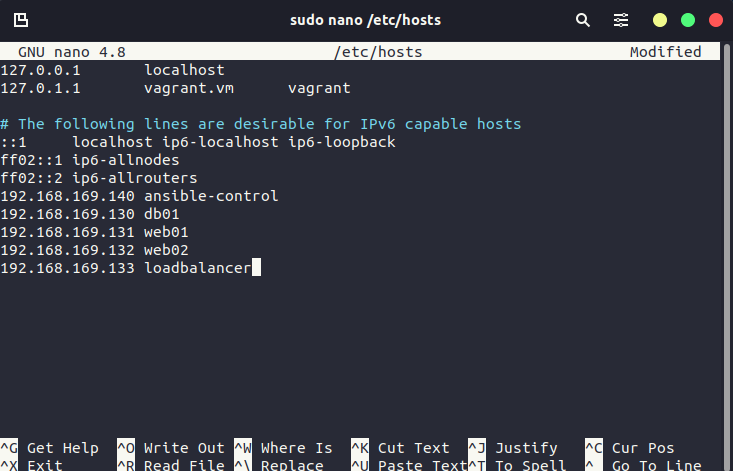

Also, note that I have added these nodes and IPs to my Ubuntu control node within the /etc/hosts file to ensure connectivity. We might also need to do an SSH configuration for each node from the Ubuntu box.

192.168.169.140 ansible-control

192.168.169.130 db01

192.168.169.131 web01

192.168.169.132 web02

192.168.169.133 loadbalancer

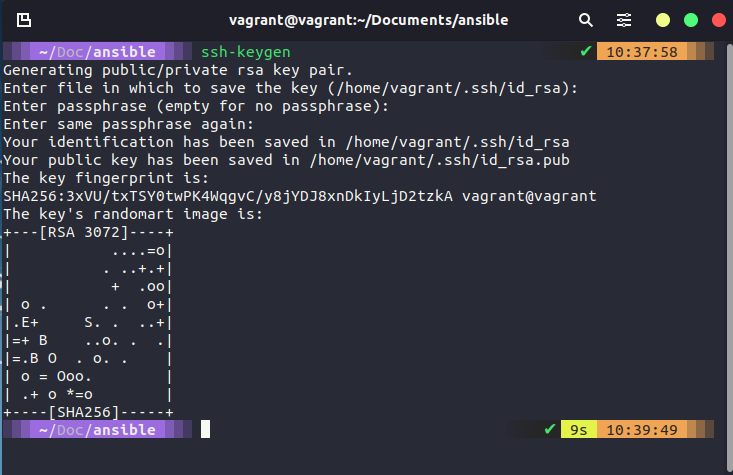

At this stage, we want to run through setting up SSH keys between your control and your server nodes. This is what we are going to do next, another way here could be to add variables into your host's file to give username and password. I would advise against this as this is never going to be a best practice.

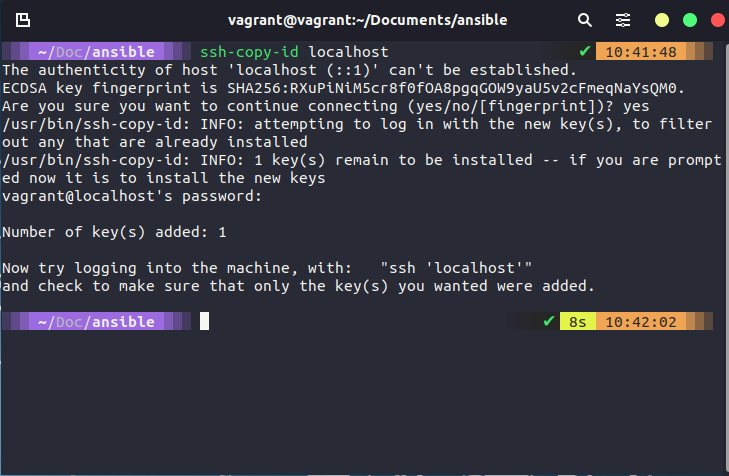

To set up SSH and share amongst your nodes, follow the steps below, you will be prompted for passwords (vagrant) and you will likely need to hit y a few times to accept.

ssh-keygen

ssh-copy-id localhost

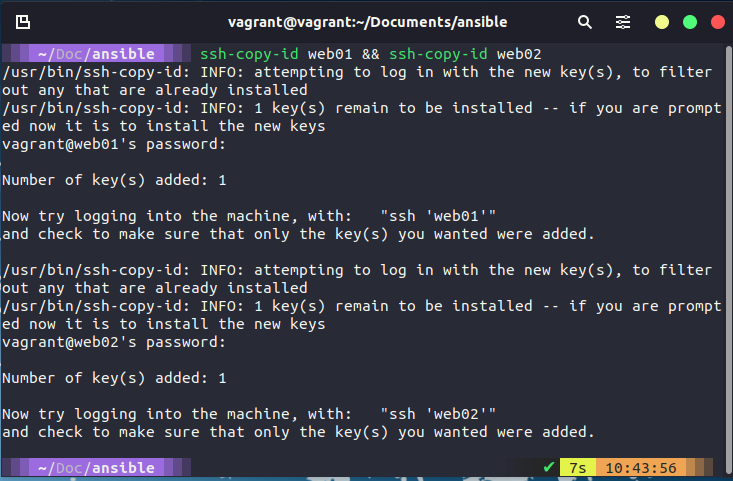

Now if you have all of your VMs switched on then you can run the ssh-copy-id web01 && ssh-copy-id web02 && ssh-copy-id loadbalancer && ssh-copy-id db01 this will prompt you for your password in our case our password is vagrant

I am not running all my VMs and only running the webservers so I issued ssh-copy-id web01 && ssh-copy-id web02

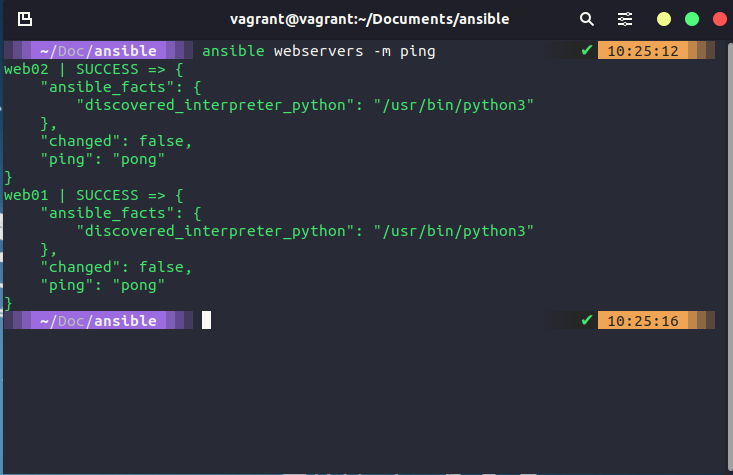

Before running any playbooks I like to make sure that I have simple connectivity with my groups so I have run ansible webservers -m ping to test connectivity.

Our First "real" Ansible Playbook

Our first Ansible playbook is going to configure our web servers, we have grouped these in our host's file under the grouping [webservers].

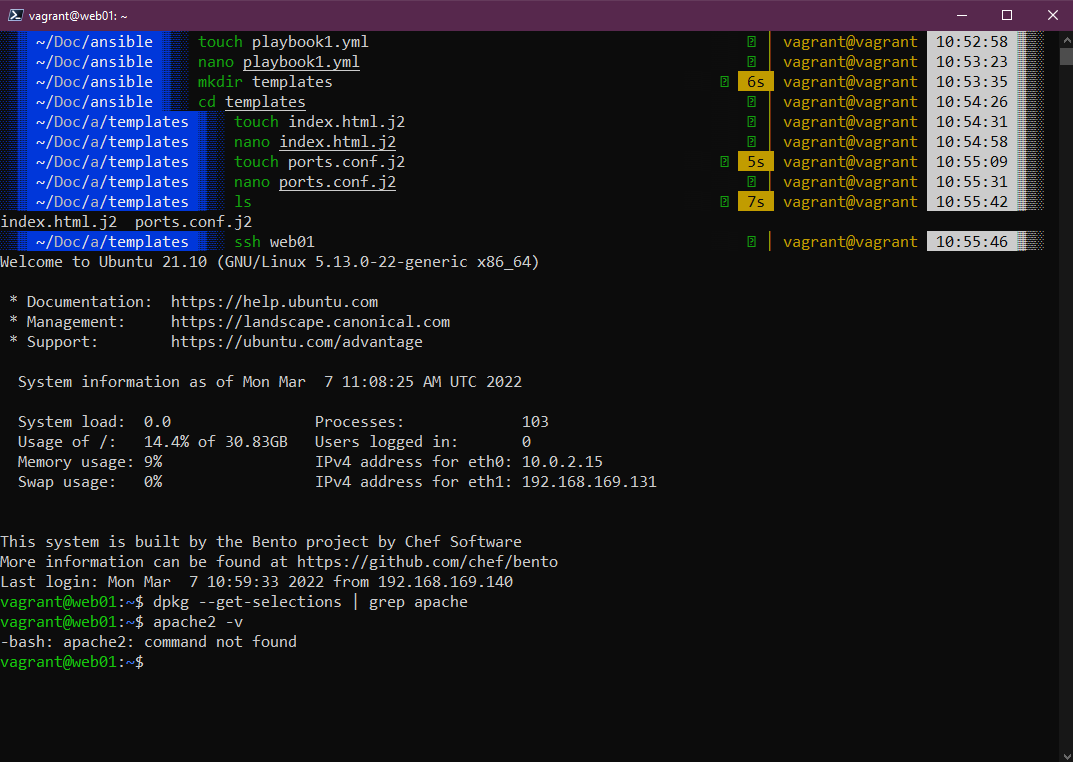

Before we run our playbook we can confirm that our web01 and web02 do not have apache installed. The top of the screenshot below is showing you the folder and file layout I have created within my ansible control to run this playbook, we have the playbook1.yml, then in the templates folder we have the index.html.j2 and ports.conf.j2 files. You can find these files in the folder listed above in the repository.

Then we SSH into web01 to check if we have apache installed?

You can see from the above that we have not got apache installed on our web01 so we can fix this by running the below playbook.

- hosts: webservers

become: yes

vars:

http_port: 8000

https_port: 4443

html_welcome_msg: "Hello 90DaysOfDevOps"

tasks:

- name: ensure apache is at the latest version

apt:

name: apache2

state: latest

- name: write the apache2 ports.conf config file

template:

src: templates/ports.conf.j2

dest: /etc/apache2/ports.conf

notify:

- restart apache

- name: write a basic index.html file

template:

src: templates/index.html.j2

dest: /var/www/html/index.html

notify:

- restart apache

- name: ensure apache is running

service:

name: apache2

state: started

handlers:

- name: restart apache

service:

name: apache2

state: restarted

Breaking down the above playbook:

- hosts: webserversthis is saying that our group to run this playbook on is a group called webserversbecome: yesmeans that our user running the playbook will become root on our remote systems. You will be prompted for the root password.- We then have

varsand this defines some environment variables we want throughout our webservers.

Following this, we start our tasks,

- Task 1 is to ensure that apache is running the latest version

- Task 2 is writing the ports.conf file from our source found in the templates folder.

- Task 3 is creating a basic index.html file

- Task 4 is making sure apache is running

Finally, we have a handlers section, Handlers: Running operations on change

"Sometimes you want a task to run only when a change is made on a machine. For example, you may want to restart a service if a task updates the configuration of that service, but not if the configuration is unchanged. Ansible uses handlers to address this use case. Handlers are tasks that only run when notified. Each handler should have a globally unique name."

At this stage, you might be thinking that we have deployed 5 VMs (including our Ubuntu Desktop machine which is acting as our Ansible Control) The other systems will come into play during the rest of the section.

Run our Playbook

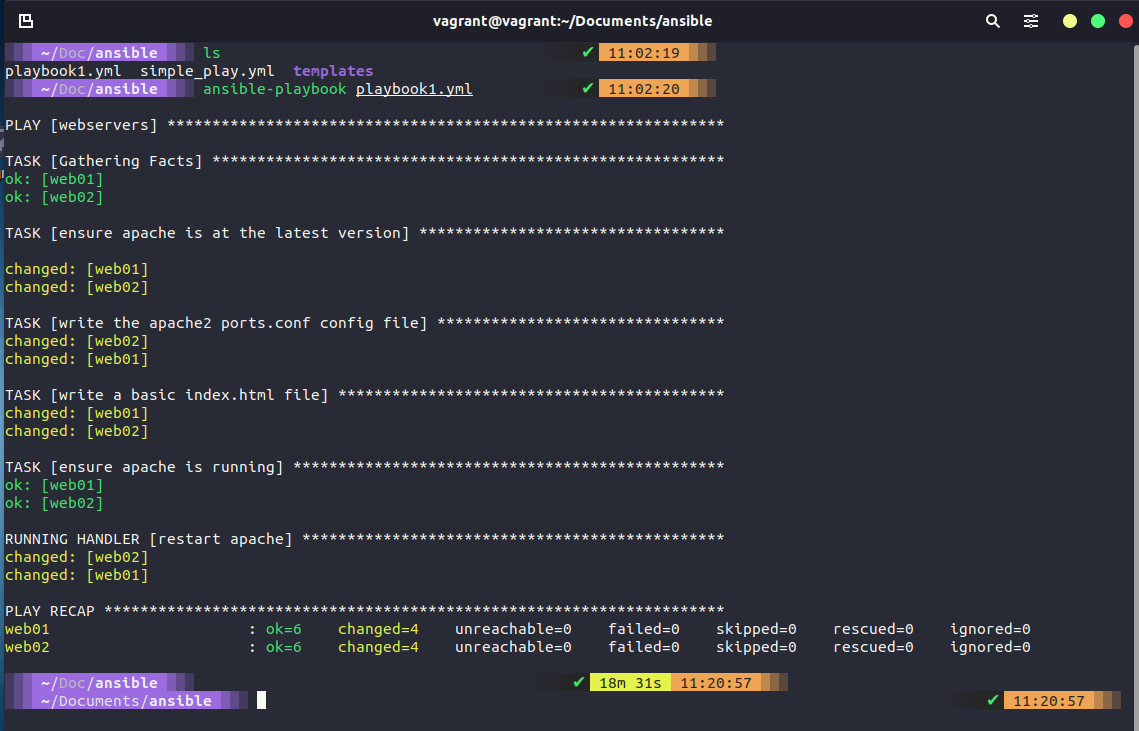

We are now ready to run our playbook against our nodes. To run our playbook we can use the ansible-playbook playbook1.yml We have defined the hosts that our playbook will run against within the playbook and this will walk through the tasks that we have defined.

When the command is complete we get an output showing our plays and tasks, this may take some time you can see from the below image that this took a while to go and install our desired state.

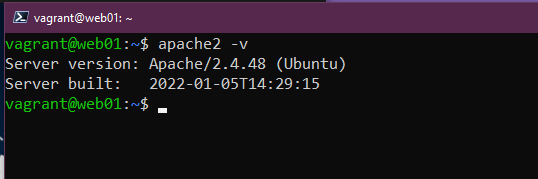

We can then double-check this by jumping into a node and checking we have the installed software on our node.

Just to round this out as we have deployed two standalone webservers with the above we can now navigate to the respective IPs that we defined and get our new website.

We are going to build on this playbook as we move through the rest of this section. I am interested as well in taking our Ubuntu desktop and seeing if we could bootstrap our applications and configuration using Ansible so we might also touch this. You saw that we can use the local host in our commands we can also run playbooks against our local host for example.

Another thing to add here is that we are only really working with Ubuntu VMs but Ansible is agnostic to the target systems. The alternatives that we have previously mentioned to manage your systems could be server by server (not scalable when you get over a large number of servers, plus a pain even with 3 nodes) we can also use shell scripting which again we covered in the Linux section but these nodes are potentially different so yes it can be done but then someone needs to maintain and manage those scripts. Ansible is free and hits the easy button vs having to have a specialised script.